I somehow missed the announcement for this when it was published in 2011, but here’s Anne Truitt Minimalism, Color Field, Morris Louis, Kenneth Noland, Bryn Mawr College, Psychology, James Truitt, edited by Eldon A. Mainyu.

It’s a 112-page collection of Wikipedia articles for $56. And it’s one of the 6,910 titles by Mainyu for sale at Barnes & Noble. It’s the on-demand publishing equivalent of Artisoo’s Amazon Art mashup of Google Images and Chinese Paint Mill. And it, too, caught Anne Truitt in its indexical merchandise net.

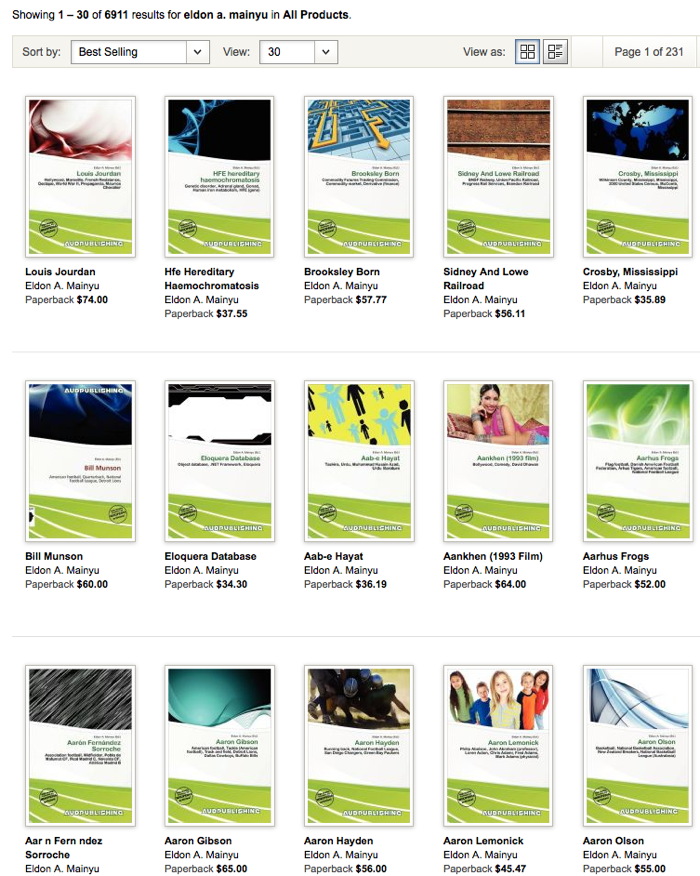

Whoops, he just cranked it to 6,911. Here are the bestsellers:

Which you’ll note veers to the alphabetical pretty quickly. I would guess that so far, BN has sold copies of seven Mainyu books.

Anyway, Mainyu’s not alone. He fronts Aud Publishing, which is just one of the 78 Wikipedia-centered imprints launched in 2011 by the Mauritius- and Germany-based book mill known as VDM Publishing. The division was apparently created and staffed by running the text of Foucault’s Pendulum into a Pynchon Name Generator:

Dismas Reinald Apostolis, Dic Press

Gerd Numitor, Flu Press

Agamemnon Maverick, Ord Publishing

Elwood Kuni Waldorm, PsychoPublishing

Indigo Theophanes Dax, The

The! Every one of them has several thousand titles and several hundred thousand Google results, tracing the invisible contours and channels of publishing’s automated datascape.

The crazy thing is, now I actually want to see some of these books, see how they turned out, but also see how they were spidered together. Because that Truitt title is not just an automated grab of the first ten links in the Wikipedia entry. Or at least it’s not now. Maybe it was in 2011.

What would assembling a more complicated or randomized chain of Wikipedia links yield? Is there a Six Degrees of Kevin Bacon-like connectedness between any and all bits of human knowledge [on Wikipedia]? Is there poetry or literature to be found/made there? Could an algorithm surfing through Wikipedia produce meaning or newness, or something beyond the temporary frisson of WTF juxtaposition?
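For what it's worth, both of those questions can be sketched in a few lines. What follows is a toy, not a reconstruction of whatever VDM actually runs: the `LINKS` graph is hand-made and hypothetical (seeded with the names from the Truitt title, with made-up links between them), and `random_surf` and `degrees_of_separation` are names I invented for illustration. A real version would presumably pull each page's outgoing links from Wikipedia itself.

```python
import random
from collections import deque

# Hypothetical, hand-made link graph. These are NOT real Wikipedia link data;
# the page names come from the Truitt title, the links are invented.
LINKS = {
    "Anne Truitt": ["Minimalism", "Color Field", "Morris Louis",
                    "Kenneth Noland", "Bryn Mawr College", "Psychology",
                    "James Truitt"],
    "Minimalism": ["Color Field", "Anne Truitt"],
    "Color Field": ["Morris Louis", "Kenneth Noland"],
    "Morris Louis": ["Kenneth Noland", "Color Field"],
    "Kenneth Noland": ["Color Field"],
    "Bryn Mawr College": ["Psychology"],
    "Psychology": [],
    "James Truitt": ["Anne Truitt"],
}


def random_surf(links, start, steps, seed=None):
    """Follow randomly chosen links from `start`, never revisiting a page.

    Stops early when the current page has no unvisited links left.
    """
    rng = random.Random(seed)
    path = [start]
    for _ in range(steps):
        unseen = [page for page in links.get(path[-1], []) if page not in path]
        if not unseen:
            break
        path.append(rng.choice(unseen))
    return path


def degrees_of_separation(links, source, target):
    """Breadth-first search: fewest clicks from `source` to `target`.

    Returns None when `target` cannot be reached by following links.
    """
    if source == target:
        return 0
    seen = {source}
    queue = deque([(source, 0)])
    while queue:
        page, dist = queue.popleft()
        for nxt in links.get(page, []):
            if nxt == target:
                return dist + 1
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None


if __name__ == "__main__":
    # One possible table of contents for an auto-generated "book".
    print(", ".join(random_surf(LINKS, "Anne Truitt", steps=5)))
    print(degrees_of_separation(LINKS, "James Truitt", "Kenneth Noland"))
```

The random walk gives you the WTF juxtaposition; the breadth-first search gives you the Kevin Bacon number. Neither one, obviously, gives you the poetry.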

I think of magazine projects like Maurizio Cattelan & Dominique Gonzalez-Foerster’s Permanent Food, which solicited and compiled tearsheets from around the world, in hopes of creating what Maurizio called a magazine with no personality. [I forgot ada’web also did a web version, Permanent Foam. 17 years later, I guess I should be more surprised that one of the 14 links actually still works.] Then there’s the more associational daisy chain of visuals that was Ruth Root’s wondrous press release for her 2008 show at Kreps. And of course, The Arcades Project.

So it seems entirely possible to make an engrossing read, maybe even a story, out of a Wikipedia surf session. Someone has to have done this already, right? But I guess the gauntlet Mainyu has thrown down is to find the genius in the automation, to see if you can write the bot that creates the iconic literature of its time.

the making of, by greg allen