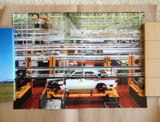

Blade Runner - Autoencoded: Full film from Terence Broad.

This is fascinating. Terence Broad, an artist and computer scientist at Goldsmiths, has created a film using a neural network. The network "watches" a film and encodes it frame by frame, squeezing each image down to a small set of numbers, then it decodes, or reconstructs, each frame from that data. That encode-then-decode pairing is what makes it an autoencoder. It's the data equivalent of printing from a negative, or casting from a mold. Except it is not a copy, per se, but a re-generation. Does that make sense? I'm trying to understand and explain it without resorting to cutting and pasting from his Medium blog post about it. The point is, his network regenerated every image from the encoded data it had been reduced to. For an entire film.
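For the curious, here is a toy sketch of the encode/decode idea in Python. It is not Broad's model: it assumes a tiny linear autoencoder trained with plain gradient descent on made-up 8-pixel "frames", and the names `make_frame`, `encode`, `decode` and `train_autoencoder` are hypothetical, chosen just for illustration. The point it tries to show is that the decoder redraws each frame from a short code alone, never from the original pixels.

```python
import random

def make_frame(a, b):
    """Hypothetical toy 'frame': 8 pixels, left half brightness a, right half b."""
    return [a] * 4 + [b] * 4

def encode(enc, frame):
    # Compress the frame into a short code vector (the "encoded data").
    return [sum(w * px for w, px in zip(row, frame)) for row in enc]

def decode(dec, code):
    # Regenerate pixels from the code alone; the original frame is not consulted.
    return [sum(w * c for w, c in zip(row, code)) for row in dec]

def reconstruction_error(enc, dec, frame):
    # Mean squared difference between the regenerated frame and the original.
    recon = decode(dec, encode(enc, frame))
    return sum((r - px) ** 2 for r, px in zip(recon, frame)) / len(frame)

def train_autoencoder(frames, code_size, epochs=1000, lr=0.02, seed=0):
    """Fit encoder/decoder weights by per-frame gradient descent (a toy, not Broad's network)."""
    rng = random.Random(seed)
    n = len(frames[0])
    enc = [[rng.uniform(-0.1, 0.1) for _ in range(n)] for _ in range(code_size)]
    dec = [[rng.uniform(-0.1, 0.1) for _ in range(code_size)] for _ in range(n)]
    for _ in range(epochs):
        for x in frames:
            code = encode(enc, x)
            err = [r - px for r, px in zip(decode(dec, code), x)]
            # Gradient of 0.5 * squared error with respect to the code,
            # computed before the decoder weights are changed.
            grad_code = [sum(dec[i][j] * err[i] for i in range(n)) for j in range(code_size)]
            for i in range(n):
                for j in range(code_size):
                    dec[i][j] -= lr * err[i] * code[j]
            for j in range(code_size):
                for k in range(n):
                    enc[j][k] -= lr * grad_code[j] * x[k]
    return enc, dec

# Usage: six toy frames that vary only in left vs right brightness.
frames = [make_frame(a, b) for a, b in [(1, 0), (0, 1), (1, 1), (0.5, 1), (1, 0.5), (0.2, 0.8)]]
enc, dec = train_autoencoder(frames, code_size=2)
print(encode(enc, frames[3]))  # 2 numbers standing in for 8 pixels
print(decode(dec, encode(enc, frames[3])))
print(reconstruction_error(enc, dec, frames[3]))
```

Because these toy frames only vary in two ways, a 2-number code is enough to regenerate them almost exactly; a real film is far richer, which is part of why Broad's reconstructions come out dreamy and smeared rather than perfect.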

The film he chose for his network to watch and re-create: Blade Runner. Broad goes into some of the philosophical reasons for choosing Blade Runner, but the best explanation comes from Vox, where Aja Romano reports that Broad's freshly generated film triggered Warner Brothers' DMCA bot, which temporarily knocked the film off Vimeo. It was put back:

> In other words: Warner had just DMCA'd an artificial reconstruction of a film about artificial intelligence being indistinguishable from humans, because it couldn't distinguish between the simulation and the real thing.

It all reminds me of the work done at UC Berkeley a few years ago that reconstructed images from brain scan data taken with an fMRI, which in turn reminded me of the dreamcam and playback equipment in Wim Wenders' Until The End of The World.

As Roy Batty said, "If only you could see what I've seen with your eyes." And now you can.

Autoencoding Blade Runner | Reconstructing films with artificial neural networks [medium/@Terrybroad]

A guy trained a machine to "watch" Blade Runner. Then things got seriously sci-fi. [vox]

Previously: The Until The End Of The World Is Nigh